The Rise of Deepfakes

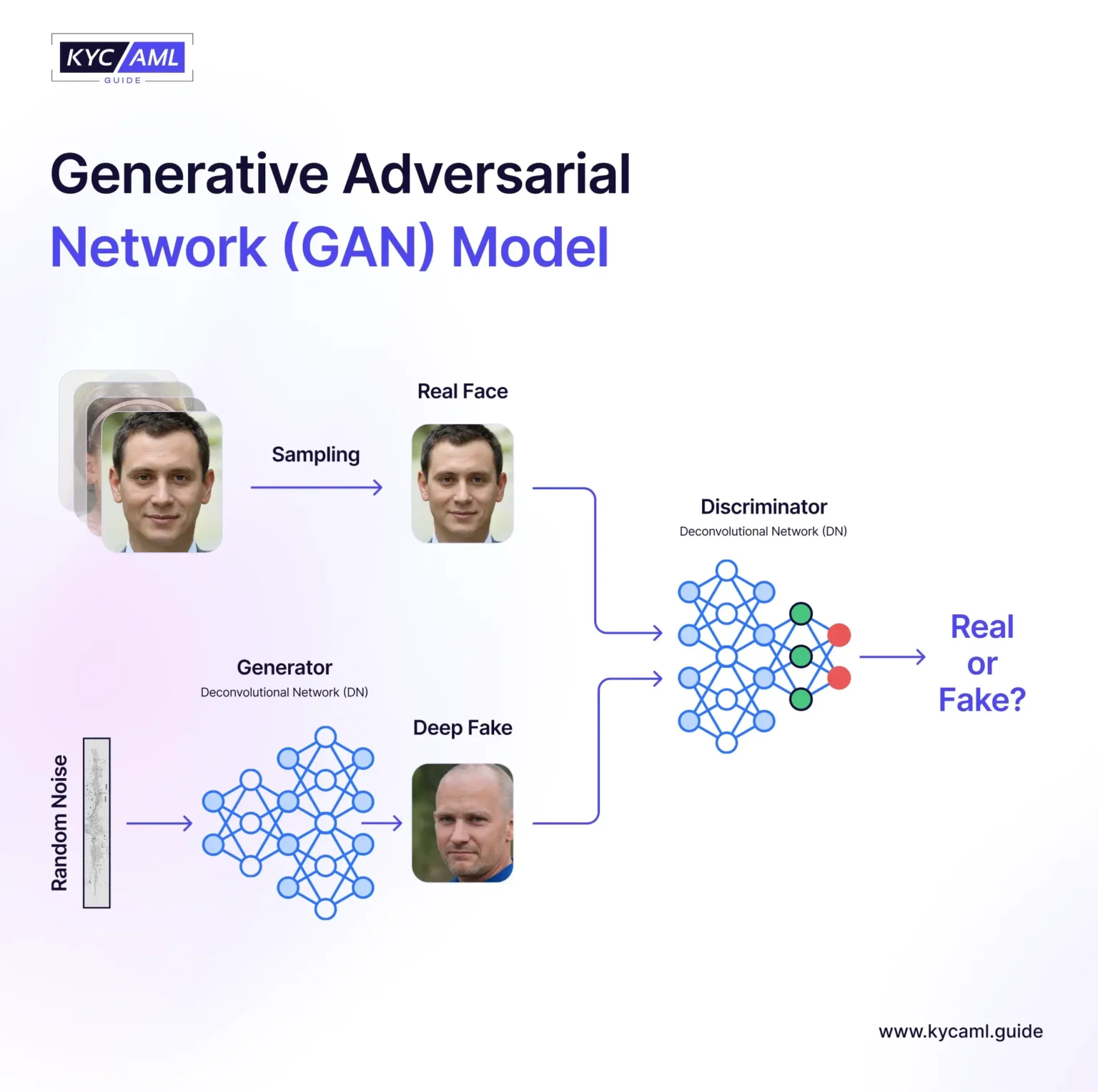

The term “deepfake” combines “deep learning” and “fake,” and not all deepfakes are malicious. Some are made purely for fun or for business. Deepfakes, for example, allowed David Beckham to speak nine languages during a malaria awareness campaign and allowed Robert De Niro to appear younger in “The Irishman”. The first was praised for its impact and the second impressed audiences; neither posed a threat. The real dangers appear when deepfakes are used deliberately to deceive, for example to defeat Know Your Customer (KYC) and Anti-Money Laundering (AML) checks. Consider a scenario in which a threat actor poses as a legitimate user to evade security measures, or as an executive to authorize fraudulent transactions.

Recent events have increasingly highlighted the fraudulent potential of deepfake technology, such as the spread of fake explicit images of Taylor Swift on social media. Australian authorities also warned about deepfake scams after 400 people lost more than US$5.2 million on online trading platforms in 2023. In a scam reported on February 4, 2024, a Hong Kong multinational lost HK$200 million after fraudsters used deepfake technology to stage a convincing video conference call featuring a digitally recreated chief financial officer, who ordered the fraudulent money transfers.

Fraudulent and malicious use of deepfakes is on the rise in financial services and poses significant risks to companies and financial institutions. According to MarketWatch, future losses could be even higher, as experts predict an annual increase of $2 billion (€1.4 billion) in identity fraud enabled by generative AI.


Types of Generative AI Deepfake Attacks

Let’s look at the main types of generative AI deepfake attacks.

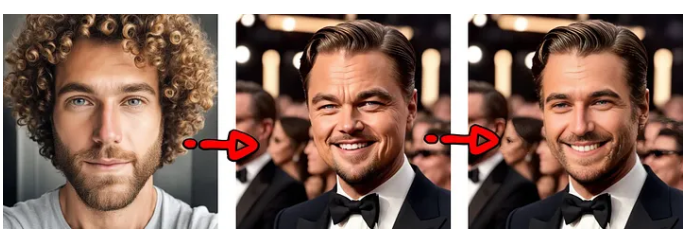

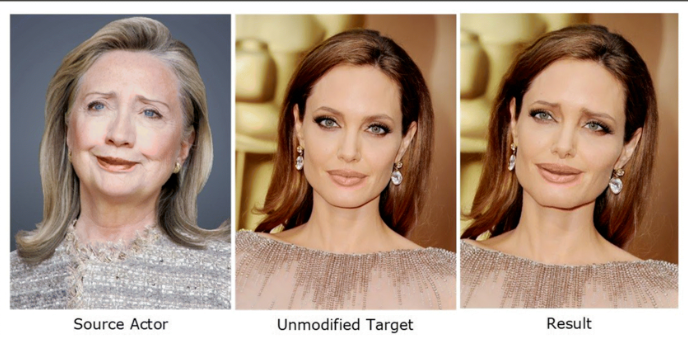

1. Face Swaps:

Face swaps are a form of synthetic media created from two inputs. They take an existing image, video, or live stream and apply a different identity to the original feed, often in real time. The result is a fake 3D video output that blends multiple faces yet preserves the biometric features of the person being impersonated. The resemblance is more than visually striking: without proper defenses, face-matching systems can accept the output as the real person.

You’ve probably seen deepfakes like this on TV and social media, but they are also being used for fraud. In one scam in China, a fraudster used a video call, posing as the victim’s friend, to convince the victim to hand over more than $600,000.

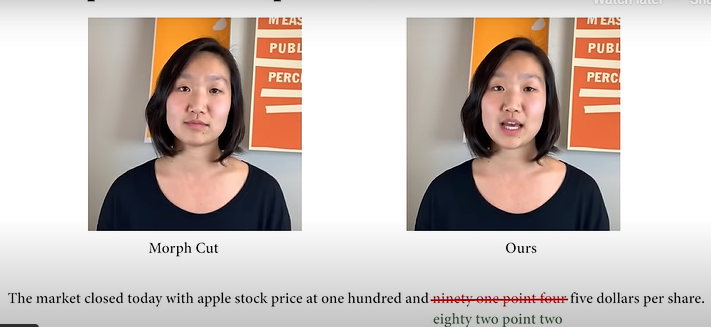

2. Re-enactments:

Re-enactments are also known as ‘Puppet Master’ deepfakes. In this technique, the person in the source video controls the facial expressions and movements of the person in the target video or image. A performer sitting in front of the camera drives the facial movements and expressions shown in the video or photo. While a face swap replaces one person’s identity with another’s (identity manipulation), a re-enactment keeps the target’s identity and transfers only the source’s expressions and movements (expression manipulation).

3. Voice Cloning:

Audio spoofing, or voice cloning, is another form of generative AI deepfake technology and a growing threat to elections. From recorded audio clips of a person speaking, such a system can produce a synthetic voice that sounds just like that person and can be made to “say” whatever it is programmed to. Two days before the 2023 Slovak elections, a hoax featuring the voice of Michal Šimečka, leader of the liberal Progressive Slovakia party, circulated on social media. The clip falsely suggested that the politician had bought the votes of the country’s Roma minority in an attempt to rig the elections.

4. Synthetic Media Generation:

Generative AI can create artificial content, such as images, videos, music, or audio, depicting things that never existed. This opens the door to fabricating fictional scenarios or events. For example, deepfake photos of non-existent people have been used to create fake accounts, which are then used in information operations or to spread disinformation.

5. Text-Based Deepfakes:

AI-generated text, such as articles, social media posts, or even emails, can mimic a specific individual’s writing style, making it easier to produce misleading content attributed to that person.

6. Object Manipulation:

In addition to faces and bodies, generative AI deepfakes can manipulate objects in videos, changing their appearance or behavior. This can be used to misrepresent events or circumstances.

7. Hybrid Deepfakes:

Hybrid deepfakes combine several techniques, such as a face swap paired with a cloned voice or synthesized body movements, to create more sophisticated and convincing results.

Bottom Line

Deepfake technology uses artificial intelligence and deep learning to produce highly realistic and immersive multimedia content, with positive applications in audio restoration, film production, and education. However, as the technology advances rapidly, generative AI deepfake attacks have become a major concern, posing risks to individuals and organizations. Effective deepfake detection combines human judgment with advanced tools that identify anomalies such as irregular audio patterns and unnatural shadows. To counter deepfake threats, organizations must establish comprehensive policies, protect intellectual property, deploy detection tools, and remain vigilant against emerging challenges.